Advika Singh & Kaveri Dole || STAFF WRITERS

Many students in Andover High School’s competitive environment balance demanding courses with extracurricular activities and personal responsibilities. When that load becomes overwhelming, some turn to Artificial Intelligence (AI).

As the use of technology in everyday life has become prevalent, AI tools have become more accessible, and their presence in schools has sparked many conflicts. As more students begin to use AI, the need for clear guidelines, especially in an academic setting, becomes more obvious.

Patrick Benjamin, a sophomore at AHS, has noticed the impact of these unclear AI guidelines. “The AI rules for each class are different,” he said, pointing to how blurry the line between misuse and assistance can feel, which leads to inconsistent usage across classrooms. This uncertainty may be why the use of AI persists, as students are unsure how to navigate expectations that differ from teacher to teacher.

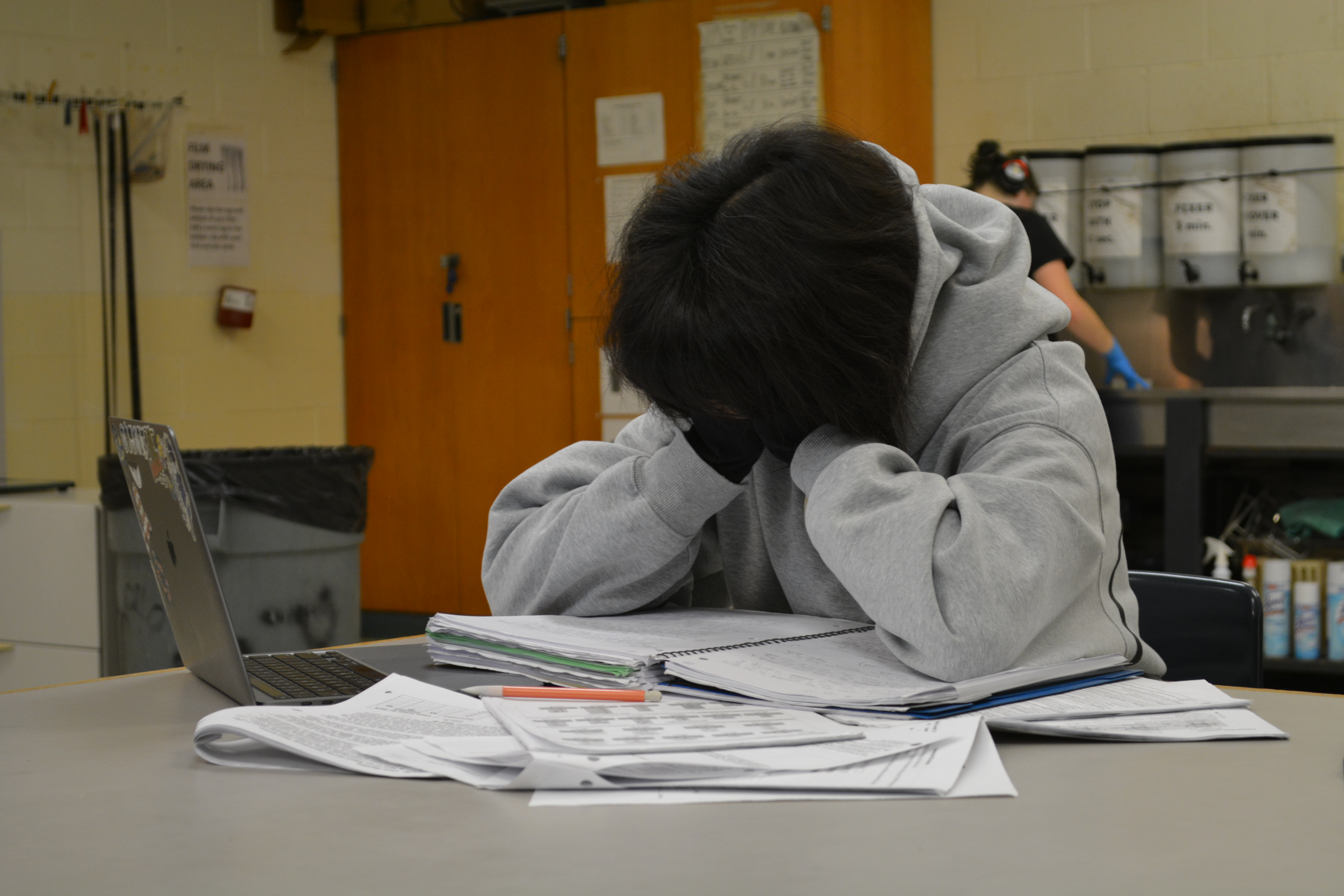

Freshman Bhavika Sharma identifies stress as the main reason students turn to AI shortcuts. “Most people just get very stressed and pressured because they have so much work,” Sharma said. For students with overlapping deadlines and long nights of homework, AI can appear to offer relief by helping them complete their tasks. That help can range from streamlining tedious tasks to direct plagiarism, something teachers are becoming increasingly wary of.

Guidance counselor Kimberly Bergey has also noticed the impact of rigorous schedules on students. “A lot of students feel like there aren’t enough hours in the day,” Bergey said. At a competitive school like AHS, academic pressure can be intense. The average AP score at AHS is 3.66, compared with a national average of 3.06. These heightened expectations often fall on the shoulders of students who strive for academic achievement.

However, irresponsible AI use is not the solution. “I think cheating prevents you from retaining information, and it can negatively affect you in the future,” said Latin teacher Laura Jordan. Irresponsible AI use can include having AI write entire essays or assignments and submitting them as your own, using it to answer test questions in real time, letting AI summaries replace the original text, and building on AI research without fact-checking. Although many factors can affect a student’s decision to use AI, the choice is ultimately the student’s, and unfortunately it often results in abuse.

On the other hand, AI isn’t always sought out as a shortcut. Some curious students use it as a resource rather than as a means of plagiarism. “I will occasionally get AI to create practice problems for me resulting in me scoring better in tests,” said junior Selina Amere. Other responsible uses of AI include getting explanations of difficult topics, asking for hints or feedback rather than full answers, summarizing notes you have already written, and using AI text-to-speech or speech-to-text tools.